The Beginning of the End of Misinformation?

June 18, 2025 | Categories: AI, Fact-checking, Reason, Truth, Misinformation

The Dr. Bo Show with Bo Bennett, PhD

The Dr. Bo Show is a critical thinking-, reason-, and science-based approach to issues that matter. It is the podcast of social psychologist Bo Bennett, and its topics relate to all of his books. Depending on the episode, it is hosted by either Dr. Bennett or Jerry Sage, and each episode discusses Dr. Bennett's work.

As the world wallows in doom and gloom about how the Internet—more specifically, AI—will bring about an age of lies, fake news, disinformation, and misinformation, I argue that we have reason for hope. Hope that, rather than leading us into an informational apocalypse, AI may usher in a golden age of information—one where truth prevails. And this isn't some pie-in-the-sky wish; it's something already beginning to happen. Best of all, you can help make this golden age a reality. In this post, I’ll explain how.

Let’s start with a basic understanding of how information on the Internet functions: people and organizations who share information online are rewarded for virality—not for truth. Please read that again.

Why is this the case? In short, human nature. We are moved to action primarily by emotion, not reason, logic, or even truth. Worse still, a disturbingly large number of people care more about how consuming information makes them feel than whether it’s true. To many content creators, we are not humans—we are “likes,” “shares,” and “comments.” We are tools, or “useful idiots,” who react emotionally and fail to verify what we see. This emotional engagement gives others power—power derived from our attention and interaction.

We do this largely because of confirmation bias: we uncritically accept information that aligns with our beliefs and reject what contradicts them. Social media platforms, mainstream media, and especially “independent media” (often social media influencers) exploit this by creating content designed for emotional reaction, not factual accuracy.

If I’ve done my job, you now understand that information shared on the Internet is, by default, untrustworthy, even when it comes from sources you may have trusted because of their track record of accuracy (yes, myself included).

Now, let me explain why it’s just as important to fact-check information that supports your ideology as it is to debunk what goes against it. One word: credibility. People gravitate toward truth over time. The more your side is caught exaggerating, distorting, or lying, the less credible it becomes—and by extension, so does the ideology. Spreading misinformation makes you an easy target, distracting from the real issues and injustices that deserve our attention.

AI To The Rescue

Surprisingly few people realize that you can upload a meme or paste a link into an AI tool and simply ask, “Please fact-check this.” Depending on the model, results may take seconds or minutes. And the accuracy of the latest AI tools often exceeds that of even the most skilled online researchers.

I’ll demonstrate this using the tool I use most often: ChatGPT.

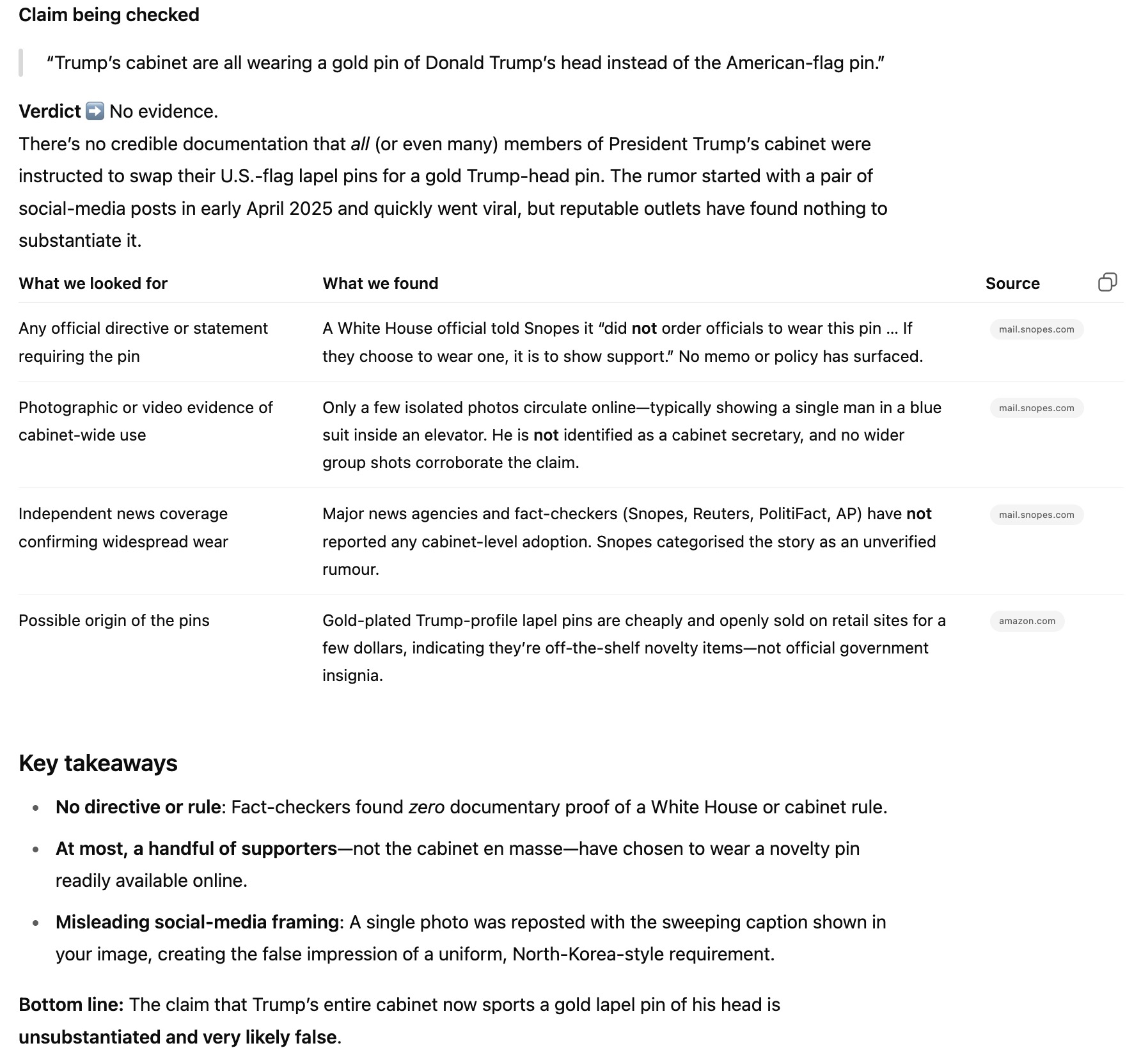

Here’s an image post I saw on the “Occupy Democrats” Facebook page:

As someone who isn’t a fan of Trump, I immediately felt anger, maybe even fury. That’s exactly what the post was designed to provoke. Angry users will react with emojis, comment with things like “What a cult!”, or, worse, share the post further.

Let me be clear: if this post is true, people should be outraged. But remember, posts are made for virality, not truth.

Fact-checking this with ChatGPT took only seconds of my effort. I saved the image, opened the ChatGPT app on my phone (the app is free), and selected the advanced “o3” model. Then I typed “Please fact-check” and attached the image. (Yes, I like to say “please” and “thank you” to AI, just in case it becomes sentient and takes over the world. It will remember that I was one of the nice ones.)

One minute and 14 seconds later, I had a response.

Even the free “4o” model gave me a similar answer immediately—just with fewer cited sources.

Now comes the responsible part: Copy and paste the fact-checked response in the comments. Preface it by saying, “This is what ChatGPT returned when asked to fact-check this.” After learning the truth, I was no longer furious—I was thoughtful and calm, responding with reason, not emotion.

But Can We Trust AI?

Good question. A reasonable concern.

Let’s ask ChatGPT:

✅ Advantages of AI Fact-Checking (like ChatGPT)

- Speed & Efficiency: AI can analyze and summarize reliable sources in seconds, cutting down the hours it might take to research manually.
- Breadth of Knowledge: ChatGPT has access to a broad base of general knowledge and can often spot misleading claims or common hoaxes quickly.
- Lack of Bias Toward a Single Source: When prompted correctly, AI can summarize multiple perspectives and cross-reference sources instead of relying on one news outlet or blog.
- Pattern Recognition: AI is good at identifying recycled misinformation, like photos taken out of context or common logical fallacies, because it has "seen" millions of examples.
- Explainability: ChatGPT can explain why a claim is questionable, breaking it down logically or scientifically in a way that’s clear and accessible.
- Guardrails Against Misinformation: It has built-in mechanisms to flag conspiratorial or harmful narratives, helping prevent rabbit-hole spirals that sometimes happen with unguided research.

⚠️ But Why Not Trust Blindly?

While ChatGPT can help:

- It’s still not perfect. Its knowledge cutoff may mean outdated info.
- It doesn’t always cite sources unless prompted.
- It can miss nuance or recent developments. (Bo's note: ChatGPT o3 addresses all of these, especially if you specifically ask for all sources.)

So critical thinking is still essential, even with AI tools.

🤝 Best Practice: Combine Both

The smartest use is to start with ChatGPT to get a clear overview, then verify using trusted primary sources like:

- News outlets with editorial standards
- Government or institutional websites
- Fact-checking organizations (e.g., Snopes, PolitiFact)

(Bo's note: the problem with this is that “trusted primary sources” are very different for different ideologies. For example, most people would consider “nasa.gov” a reliable source, but flat-earthers would not, since they think NASA is lying to us.)

When I asked ChatGPT what sources it uses and how it determines what is trustworthy, it responded:

🧠 What Kinds of Sources ChatGPT (and tools like it) Use

I don’t browse the web or pull live data unless explicitly connected to a tool that can do so (like ChatGPT 4.0 or o3), but when I fact-check or explain something based on training, I rely on a mixture of:

- Authoritative Institutions. These include:
  - Government agencies (CDC, FDA, NASA, etc.)
  - International organizations (WHO, UN, World Bank)
  - Scientific and medical bodies (NIH, Mayo Clinic, Cochrane Reviews)
- Peer-Reviewed Research & Academic Publications
- Reputable News Organizations
  - Outlets with clear editorial standards and fact-checking procedures: e.g., Reuters, AP, BBC, The New York Times, The Wall Street Journal
- Dedicated Fact-Checking Organizations
  - Snopes, PolitiFact, FactCheck.org, Reuters Fact Check, AFP Fact Check, etc.
- Historical Databases and Encyclopedias

✅ How Trustworthiness Is Evaluated

In both training and real-time browsing tools (when enabled), the AI weighs source trustworthiness based on principles like:

| Criteria | What It Means |
|---|---|
| Reputation | Is the source widely respected and cited in academia, media, etc.? |
| Transparency | Does it disclose sources, funding, authorship, and corrections? |
| Peer Review or Editorial Oversight | Is there a review process to prevent errors or bias? |
| Evidence-Based | Does it back claims with data or clearly indicate when it’s opinion? |
| Consistency | Does it align with other credible sources on the same topic? |

Sources that fail these checks (e.g., conspiracy blogs, anonymous social media posts, outlets with a history of publishing hoaxes) are weighted much lower or excluded.

⚠️ Important Caveat

Even good sources can get things wrong, especially early in breaking news. That’s why multiple, independent confirmations are preferred—something AI is trained to recognize and emphasize when forming conclusions.

--- end output ---

Bottom line: The standards AI uses to evaluate sources are typically more rigorous than those used by individuals. It’s reasonable to trust the results while keeping an eye on the references if debate arises.

The Bigger Picture

What happens when more of us refuse to be tools for spreading misinformation and instead become responsible sharers of truth?

People and organizations will find it harder to circulate “falsehoods” (I want to say “bullshit,” but I’m trying to stay professional… so I won’t say “bullshit,” even though it is “bullshit”). Social pressure will build, demanding accuracy and accountability. We'll flip the script, using algorithms and platforms as tools for truth, which is the foundation of all journalistic integrity.

Your Task Is Simple:

- Fact-check posts using AI before reacting emotionally.
- If a post is false, deceptive, or misleading, post AI’s fact-checked output in the comments.
- Report the post. On Facebook, click the three dots > “Report post” > “Scam, fraud, or false information.” Other platforms have similar options.

This usually takes less than a minute.

But by doing it, you're helping make the Internet a better, more truthful place.

You're helping build the informational golden age.